What if I told you that just seven years ago, only 15% of businesses actually deployed their big data projects into production? How is that possible? 🤯

Big Data was the biggest trend of the 2010s, yet only a fraction of the companies investing in it saw any return on that investment. According to a Gartner press release from late 2016, only 15% of businesses had deployed their big data projects into production, barely up from 14% the year before. Despite significant advances in both technology and domain knowledge (cloud, distributed systems, etc.), most companies still struggle to get real value from data-driven insights.

The problem? More often than not, management doesn’t truly understand the complexities of becoming a data-driven organization, or how to make the most of their data teams.

In this next edition of the Operational Analytics Book Club catch-up series, we're covering the perfect book to address this issue: Data Teams: A Unified Management Model for Successful Data-Focused Teams by Jesse Anderson. This post is the first of a two-part series. It covers the first two parts of the book, Introducing Data Teams and Building Your Data Team, along with highlights from the book club meetings where we discussed them.

Interested in joining a wonderful community of data professionals? Head over to this link to check out the Operational Analytics Club. The book club is simply one portion of this fantastic club; there are also workshops, mentorship opportunities, and much more.

Alright, let’s dive into the highlight lessons from the first two parts of our book. 👇

Before we start, note that this book does not cover how to implement the latest and greatest data technologies (distributed systems, ETL and reverse ETL tools, orchestration tools, etc.). Jesse Anderson has worked with countless management teams to learn what works and what leads to failure within a data team. He wrote this book as a guide to help management better understand the pain points and sources of friction among data practitioners.

This book specifically targets C-suite members, upper management, and data team managers who want to expand their knowledge of the data space and better connect with, and succeed alongside, their technical team members. So if you're one of those folks (or hope to be someday soon), this book is for you. 🙌

After reading through the first two parts of this book, I can bucket the takeaways into two themes:

- It’s all just data, right? Wrong.

- The importance of a seasoned Data Engineer cannot be overstated.

Below, we'll break down each of them in more detail and explain how you can apply them when managing in the data world.

It’s all just data, right? Wrong.

When upper management wants to elevate their business to the next level with data analytics, AI/ML, and data science, the majority of them are simply thinking, “Let’s take advantage of our data.”

But how much data?

Is the data considered “big data” or “small data”?

Will distributed systems come into play?

These questions rarely occur to those unfamiliar with the current data landscape, which is why one of the author's key arguments is that management needs (at minimum) a baseline knowledge of what comprises "big data."

In our book club discussion, Jesse mentioned a rule he coined, Jesse's Law: You can't build a simple distributed system.

A distributed system is one where a task is split across multiple machines, or where data is stored across multiple computers. Adding distributed systems to your data pipelines increases complexity by 10-15x, which is why management must understand how much data the business actually has.

Another major disconnect that occurs between management and the technical members actually performing the software/data engineering and data science is that management views each data skill set as interchangeable with the next.

"We just need to hire a data scientist and then we'll begin to drive value from our data" is a common thought when the C-suite asks their VPs or directors to acquire a "data person." Jesse clearly lays out the structure of a data team that will maximize an organization's odds of success: three teams are necessary to get the best return on the time and capital invested in data. Here are the one-sentence definitions Jesse gives in his book:

- Data Science: “A data scientist is someone who has augmented their math and statistics background with programming to analyze data and create applied mathematical models.”

- Data Engineering: “A data engineer is someone who has specialized their skills in creating software solutions around big data.”

- Operations: “An operations engineer is someone with an operational or systems engineering background who has specialized their skills in big data operations, understands data, and has learned some programming.”

As Jesse points out early on in Data Teams: “From a management’s 30,000-foot view – and this is where management creates the problem – they all look like the same thing. They all transform data, they all program, and so on… This misunderstanding is what really kills teams and projects.”

The idea that all data professionals are interchangeable has led many managers to choose a data scientist as their first data hire. But this choice rarely plays out the way managers expect.

Data scientists, by nature, are interested in advanced math, statistics, and the creation of machine-learning models. These individuals rarely have the software engineering experience to ingest the data needed for model creation, organize the data in a logical schema, or automate ingesting, cleaning, and transforming data.

Making a data scientist the first data hire often leads to two key issues:

- The data scientist begins spending all of their time cleaning, organizing, and moving data when they would prefer to apply statistics and math to gain insights from the data

- Management grows frustrated with the slow (or nonexistent) progress of the "data team"

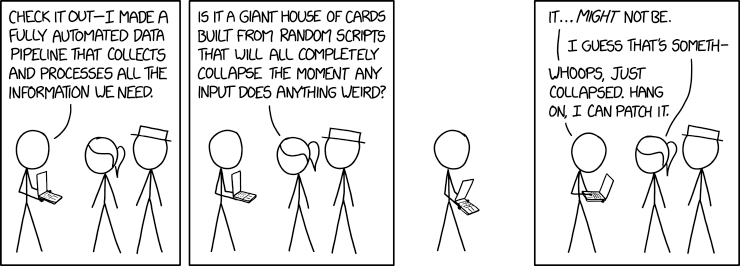

The cartoon below perfectly illustrates how I view a data team comprised of a single data scientist:

|

The importance of a seasoned Data Engineer cannot be overstated

If you’re looking for one key argument to latch onto from these first two parts of the book, here it is: Businesses will always require data engineers, as ETL tools will never scale to replace these individuals.

Having a seasoned data engineer in place early in a business's life sets the organization up for success. Data engineers have the software engineering skills needed to build robust data pipelines that won't break or collapse as soon as something "weird" enters them. Because they are proficient in software engineering practices, data engineers produce well-structured, organized code rather than code that routinely breaks due to poor design and faulty logic.

But if a company brings on data engineers too late, they cannot save poorly built data products. As Jesse explains, data teams that have DBAs or SQL-focused developers building data products without the collaboration or mentorship of data engineers will create "'production' system[s] held together with more duct tape and hope than anything else."
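To make "won't break as soon as something weird enters the pipeline" a little more concrete, here's a minimal Python sketch of one common pattern (not taken from the book): validate each record and set malformed ones aside with a reason, instead of letting a single bad row crash the whole job. The record fields (`order_id`, `amount`) and the names `process_batch` and `BatchResult` are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple


@dataclass
class BatchResult:
    """Cleaned records plus any records that failed validation."""
    clean: List[Dict[str, Any]] = field(default_factory=list)
    quarantined: List[Tuple[Dict[str, Any], str]] = field(default_factory=list)


def process_batch(records: List[Dict[str, Any]]) -> BatchResult:
    """Validate and transform raw order records (hypothetical schema).

    Bad records are quarantined with a reason instead of raising,
    so one malformed row cannot take down the whole batch.
    """
    result = BatchResult()
    for record in records:
        try:
            order_id = str(record["order_id"])  # KeyError if the field is missing
            amount = float(record["amount"])    # ValueError/TypeError if not numeric
            if amount < 0:
                raise ValueError("negative amount")
            result.clean.append({"order_id": order_id, "amount": round(amount, 2)})
        except (KeyError, TypeError, ValueError) as exc:
            result.quarantined.append((record, f"{type(exc).__name__}: {exc}"))
    return result


if __name__ == "__main__":
    raw = [
        {"order_id": 1, "amount": "19.99"},
        {"order_id": 2, "amount": "not a number"},
        {"amount": 5},
        {"order_id": 4, "amount": -3},
    ]
    batch = process_batch(raw)
    print(len(batch.clean), len(batch.quarantined))  # 1 3
```

The quarantined records, along with their reasons, can then be inspected or replayed later, which is the difference between a pipeline that degrades gracefully and one held together with duct tape.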

So, what sets data engineers apart from their data scientist and analyst counterparts? Here are just a few key skills that businesses should look for in a data engineer.

Distributed systems

If your business truly needs to analyze and gain insight from "big data," you need distributed systems. Data engineers should have at least intermediate-level skills with distributed systems, since these systems are complex (see Jesse's Law). Working with distributed systems requires knowledge of resource allocation, network bandwidth requirements, virtual machine/container creation, proper dataset partitioning, and failure resolution.
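As one small illustration of what "proper dataset partitioning" can involve, here's a minimal Python sketch of key-based hash partitioning, which is only one of several partitioning strategies. It assigns every record with the same key to the same partition, so related rows can be processed together on one machine. The function names and the `customer_id` field in the usage note are hypothetical.

```python
import hashlib
from collections import defaultdict
from typing import Any, Dict, Iterable, List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition index deterministically.

    Uses MD5 rather than Python's built-in hash(), which is randomized
    per process for strings and would send the same key to different
    partitions on different machines.
    """
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def partition_records(
    records: Iterable[Dict[str, Any]], key_field: str, num_partitions: int
) -> Dict[int, List[Dict[str, Any]]]:
    """Group records into partitions so all rows sharing a key stay together."""
    partitions: Dict[int, List[Dict[str, Any]]] = defaultdict(list)
    for record in records:
        index = partition_for(str(record[key_field]), num_partitions)
        partitions[index].append(record)
    return dict(partitions)
```

For example, `partition_records(events, "customer_id", 8)` would place all of one customer's events in a single partition. The catch, and part of why partitioning takes real expertise, is skew: a single very active key still lands on one partition and can overload that machine.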

Advanced programming

Many data scientists and analysts can program, but data engineers have the skills to take programs into production. Jesse clearly explains that advanced programming means more than knowing a language's syntax; it also means understanding continuous integration, unit testing, and software engineering best practices.
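To show what unit testing looks like for data code, here's a minimal Python sketch using the standard library's `unittest` module. The transform `normalize_email` is hypothetical, and its validity check is deliberately loose (it is not a full email validator). In a continuous integration setup, a CI service would typically run a command such as `python -m unittest` on every commit, so a change that breaks the transform is caught before it reaches production data.

```python
import unittest
from typing import Optional


def normalize_email(raw: Optional[str]) -> Optional[str]:
    """Hypothetical transform: trim and lowercase an email, or return None if invalid."""
    if raw is None:
        return None
    cleaned = raw.strip().lower()
    # Very loose check for illustration only: exactly one "@", not at either end.
    if cleaned.count("@") != 1 or cleaned.startswith("@") or cleaned.endswith("@"):
        return None
    return cleaned


class NormalizeEmailTest(unittest.TestCase):
    def test_trims_and_lowercases(self):
        self.assertEqual(normalize_email("  Ada@Example.COM "), "ada@example.com")

    def test_rejects_missing_at_sign(self):
        self.assertIsNone(normalize_email("not-an-email"))

    def test_rejects_multiple_at_signs(self):
        self.assertIsNone(normalize_email("a@@b.com"))

    def test_handles_none(self):
        self.assertIsNone(normalize_email(None))


if __name__ == "__main__":
    unittest.main()
```

Each test pins down one expected behavior, including the "weird" inputs (missing values, malformed strings) that tend to break ad hoc scripts.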

Domain knowledge

While data engineers have a wide range of technical skills, they must also grasp how those skills translate into business success. Data engineers must understand the domain their company operates in to design robust data products that effectively solve customers' problems and drive business growth. The notion that all technical team members must understand business goals at a high level echoes Fundamentals of Data Engineering (our book club's previous read).

Now that you know a few key skills required of data engineers, you can clearly see that data engineers are not data scientists. Oftentimes, management naively views data engineers as movers of data from point A to point B; this belief drastically downplays their expertise and skills. For a simple depiction of the difference between data engineers and scientists, Jesse gives a perfect visual.


What do managers need to understand? “Data” and the unique data practitioners within their business.

I found the first two parts of Data Teams extremely enlightening. While this book targets leaders in the data space, many of Jesse's ideas translate to any industry: If management doesn't understand their employees' struggles and pain points, distrust and frustration will slowly bubble to the surface. I'm looking forward to diving into Part 3, which gets into the meat of the book: managing data teams.

Having read the first portion of this book, I can safely say that managers and leaders have nothing to lose by reading it. I would not have found this book without the Operational Analytics Club; I hope to see you at our next Book Club meeting!

👉 If you're interested in joining the OA Book Club, head to this link. Parker Rogers, data community advocate at Census, leads it every two weeks in roughly hour-long sessions.