This is part one of our Product Data to Revenue series, exploring how you can turn your product data into more revenue. In each part of this series, we're revealing actionable tactics to help you generate more revenue by leveraging your existing product usage data.

More than half of SaaS companies now offer some kind of free trial or demo to potential customers. As Jason McClelland, CMO of Domino Data Lab, explained, free trials and freemium services help “generate interest amongst developers and data scientists, who typically don’t care about flashy marketing initiatives — they just want to try the product out.” Industry folks often refer to this strategy as “product-led.” We just call this good business. And it leads to a whole new kind of sales qualification: the PQL.

Product qualified leads (PQLs) have made their predecessors—marketing qualified leads (MQLs) and sales qualified leads (SQLs)—obsolete.

Our friend, Francis Brero, co-founder and CRO of MadKudu, explained PQLs’ superiority, saying:

“Instead of trying to persuade someone to purchase a product they’ve never used, you’re helping them get more value from a product they already love. Instead of guessing their needs from a contact form submission or the pages they’ve visited on your website, you can actually see how they’re using your product and shape your sales process to match.”

PQLs are the data-driven future of SaaS industry sales, and if you’re not leveraging them, you’re ignoring your ideal customers. Here’s why you need them and how to use them.

But first, WTF is a PQL?

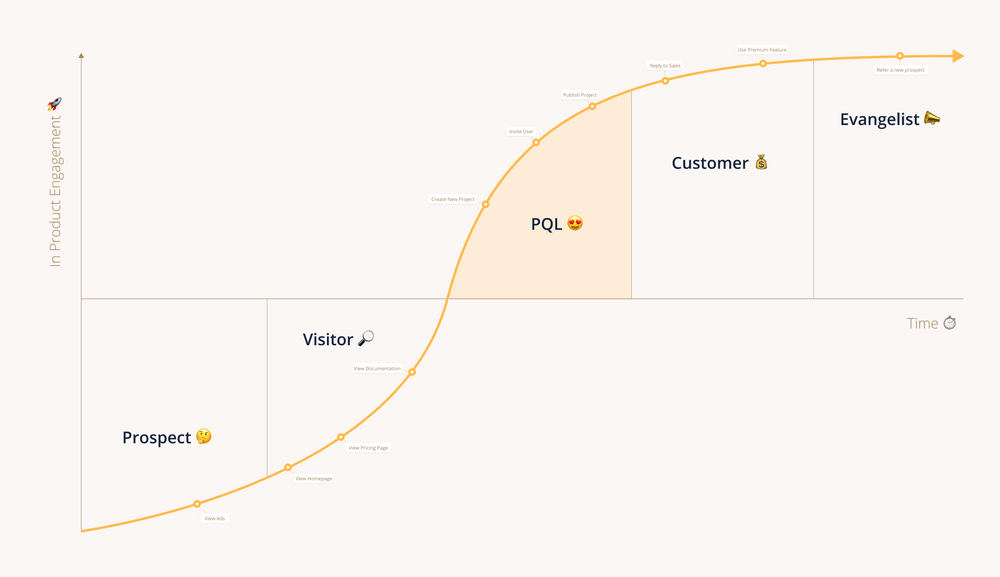

PQLs are potential customers who have already had a positive interaction with your software. They’re users of your freemium version or free trial who have completed a specific set of qualifying actions, chosen to identify the users who are most likely to buy.

A free Slack user, for instance, becomes a PQL when their team reaches 2,000 sent messages. Slack founder Stewart Butterfield explained, “Based on experience of which companies stuck with us and which didn’t, we decided that any team that has exchanged 2,000 messages in its history has tried Slack—really tried it,” and, perhaps most importantly, “. . . 93% of those customers are still using Slack today.”

WorkOS, described as a provider of “developer APIs/SDKs for enterprise-ready features like Single Sign-On (SSO),” assesses external connections to identify product qualified leads. As its CEO, Michael Grinich, explained, “Our PQL could be defined as number of external connections. External connections are defined as companies that integrate SSO or directory sync with our customers’ applications using WorkOS.”

PQLs Are the MVP of Sales Leads

PQLs are the most valuable type of sales lead for two main reasons:

- PQLs convert at a higher rate. According to Kieran Flanagan, the VP of growth at HubSpot, “In B2B SaaS, PQLs (product-qualified leads) will often convert at 5x to 6x that of MQLs (marketing-qualified leads).”

- PQLs are less likely to churn once they’ve become paying customers. Bonjoro reduced its customer churn by over 60% just by switching its focus from MQLs to PQLs.

So, if you want to acquire more customers with a high lifetime value, you need to focus your marketing effort on generating PQLs and your sales teams on converting them—and say goodbye to MQLs and SQLs.

What Goes Into a PQL Scoring Model

To create a PQL scoring model, begin by identifying your business’s most important metrics. You likely have an intuitive understanding of what these are: the metrics you report on most often, such as the number of active users, number of shares, or adoption of enterprise features like SSO.

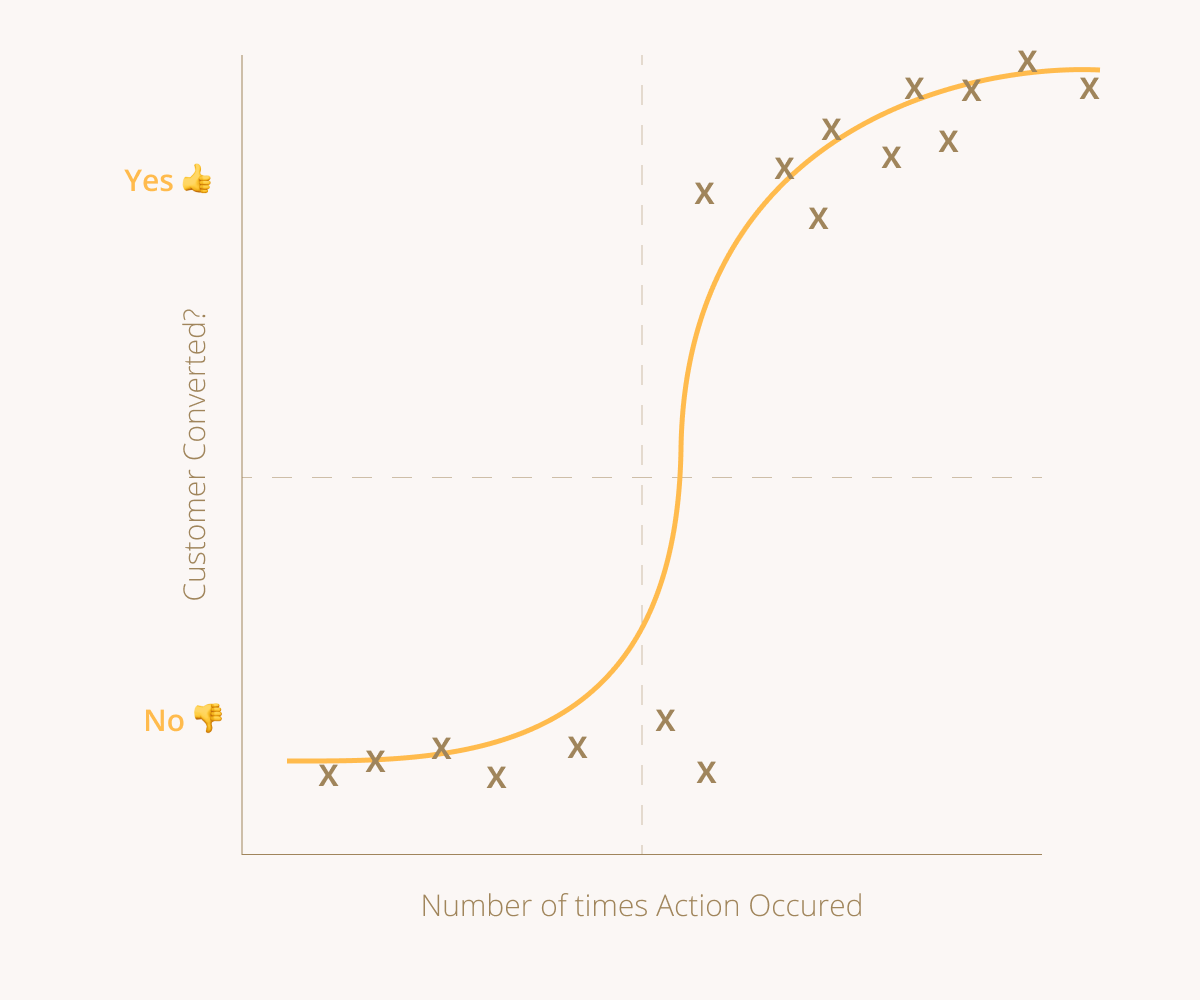

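The best way to score those metrics and identify your PQLs is to use logistic regression, a statistical model that estimates the probability of a yes-or-no outcome (here, whether a free user converts) from one or more inputs. Your objective is to identify an action or series of actions that frequently leads to a free user becoming a paying customer.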

First, form a hypothesis by considering what these actions could be, based on your unique software. Slack, for instance, included the number of messages sent as a possible indicator of a qualified lead, but they could have also included the number of emojis sent or even co-workers invited to a shared channel. If your SaaS company provides route optimization services, you may want to look at the number of stops routed per driver or the number of drivers added to an account during their free trial.

Next, review your historical data using a logistic regression model to see if your hypothesis was correct. If there’s a positive correlation between your action and conversion, your graph will look something like this:

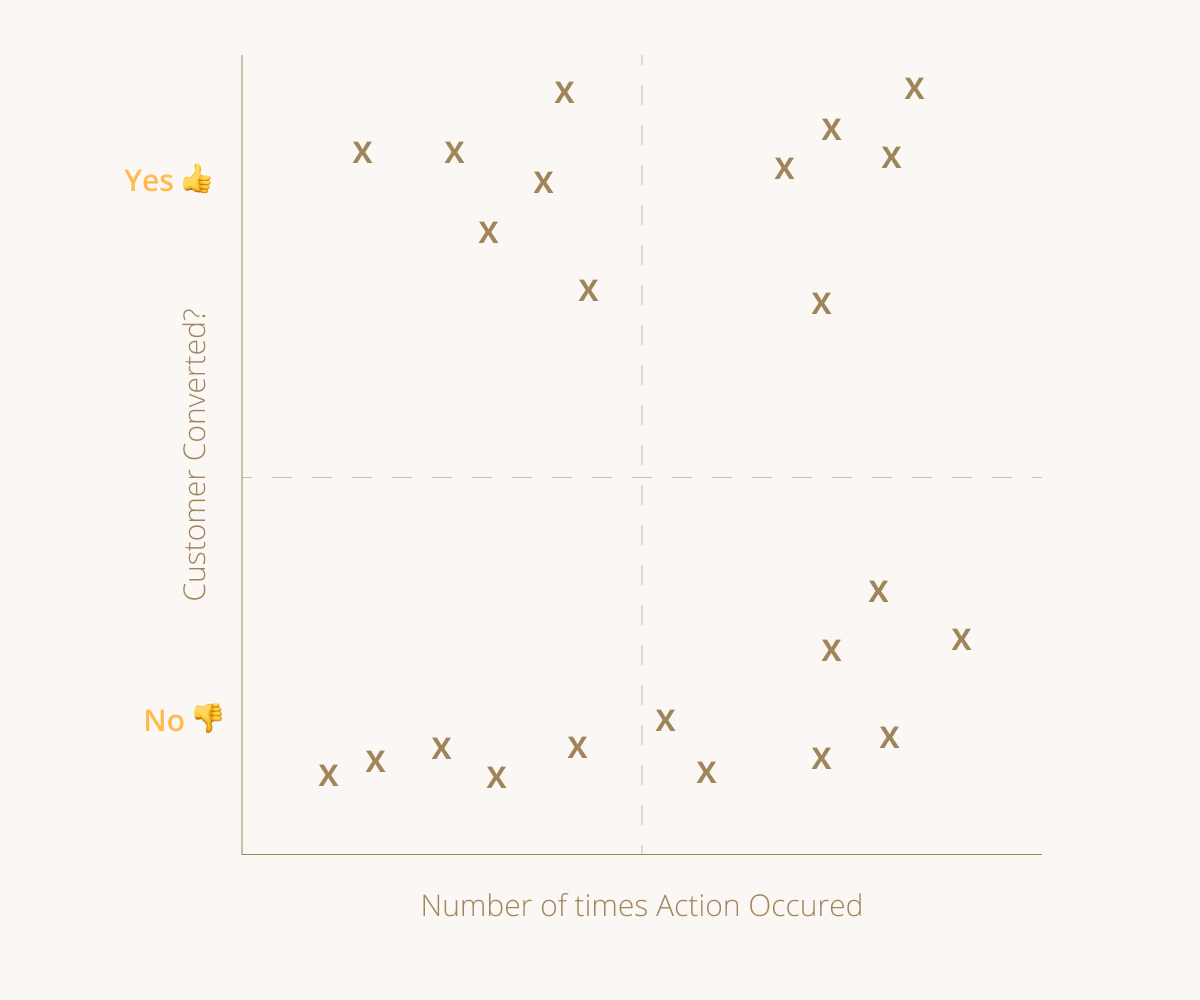

Based on this graph, a free user becomes noticeably more likely to convert once they have performed the action in question five times. Thus, five actions (such as messages sent or stops routed) would be your PQL threshold. On the other hand, if your graph is flat, with conversion no more likely at high action counts than at low ones, the action has no correlation with conversions and you can rule it out as a potential PQL.

It’s also important to note that, for some companies, a PQL is actually two separate actions completed by the same user. Slack, for instance, could have found that users who had sent 1,500 messages and started at least two shared Slack channels were most likely to see value in Slack.
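The hypothesis check described in this section can be sketched in a few lines of Python. This is a minimal illustration, not a production model: the data is invented for the example, the names `fit_logistic` and `crossing_point` are hypothetical, and the 50% probability cut-off used to pick a threshold is an assumption you would tune against your own conversion rates. In practice you would more likely use a statistics library than hand-roll the fit.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(counts, converted, lr=0.1, epochs=20000):
    """Fit P(convert) = sigmoid(w * count + b) with batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(counts)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(counts, converted):
            error = sigmoid(w * x + b) - y  # predicted minus actual
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def crossing_point(w, b, target=0.5):
    """Action count at which the predicted conversion probability reaches target.

    Returns None when the action is not positively associated with conversion.
    """
    if w < 1e-6:
        return None
    return (math.log(target / (1.0 - target)) - b) / w

# Invented data: how often each free user performed the action, and whether they converted.
counts = [0, 1, 1, 2, 2, 3, 3, 4, 4, 5, 5, 6, 6, 7, 8, 9]
converted = [0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 1, 1, 0, 1, 1, 1]

# Invented data for an action that does not predict conversion at all.
flat_counts = [1, 1, 3, 3, 5, 5, 7, 7, 9, 9]
flat_converted = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]

w, b = fit_logistic(counts, converted)
print("slope:", round(w, 2), "candidate threshold:", crossing_point(w, b))

flat_w, flat_b = fit_logistic(flat_counts, flat_converted)
print("slope:", round(flat_w, 2), "candidate threshold:", crossing_point(flat_w, flat_b))
```

A clearly positive slope corresponds to the rising curve, and the crossing point is a candidate qualifying count. A slope near zero corresponds to the flat case, where the action can be ruled out. For a PQL defined by two actions, the same idea extends to two inputs with one weight each.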

How to Use PQLs in 4 Steps

Here are the essential steps to leverage PQLs:

1. Make sure you have one “source of truth” for your data

Your data architecture needs to be set up in such a way that you have one “source of truth” to ensure that you’re deriving your PQLs from quality data. Use a data warehouse like Snowflake and a hub-and-spoke model to synchronize your data without running into consistency and reliability issues.

2. Create a score on your unified set of accounts

Separate your statistical data by buyer personas and account types. Organizational context is extremely important for understanding your leads, as different types of customers may have different qualifying actions. Enterprise accounts, for example, may need to perform a certain action 100 times before they become qualified, whereas smaller teams and accounts may only need to perform an action 25 times.

Next, set up a lead scoring model that automatically identifies leads who are most likely to convert to sales. Read more about lead scoring strategies in The Modern Guide to Lead Qualification.
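As a rough sketch of segment-aware scoring, the snippet below applies a different qualifying threshold to each account type. The thresholds of 100 and 25 come from the example above; the `Account` fields, the segment names, and the `score` and `is_pql` helpers are hypothetical and would be replaced by whatever your warehouse and scoring model actually define.

```python
from dataclasses import dataclass

# Qualifying action counts per segment, taken from the example in the text.
QUALIFYING_ACTIONS = {"enterprise": 100, "small_team": 25}

@dataclass
class Account:
    name: str
    segment: str       # assumed to be one of the keys in QUALIFYING_ACTIONS
    action_count: int  # how many times the account performed the qualifying action

def score(account):
    """Progress toward the segment's threshold, capped at 1.0."""
    threshold = QUALIFYING_ACTIONS[account.segment]
    return min(account.action_count / threshold, 1.0)

def is_pql(account):
    """True once the account has reached its segment's threshold."""
    return account.action_count >= QUALIFYING_ACTIONS[account.segment]

accounts = [
    Account("Large Corp", "enterprise", 40),
    Account("Two-Person Studio", "small_team", 40),
]
for account in accounts:
    print(account.name, score(account), is_pql(account))
```

The same 40 actions qualify the small team but not the enterprise account, which is the point of scoring each segment separately.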

3. Route the accounts to the right place with the context provided

Apply your PQL scoring model (as explained in the previous section) to identify which free users your sales team should reach out to, and why. Once you’ve identified your PQLs and refined your scoring model so you know which actions to focus on, use Census to send those PQLs to your CRM, such as Salesforce, where you can route them to the right sales reps and trigger notifications. You can also use Census to send PQLs to your marketing automation platform (MAP), such as Marketo, where you can automatically start an email campaign or sequence.
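One way to picture the routing step is as a small transformation that runs before the sync: each PQL row is given an owner and a short reason, so the rep can see why the account was flagged. The sketch below is plain Python with made-up field names, rep names, and a hypothetical `route_pqls` helper. It does not use the Census or Salesforce APIs, and in practice this logic would more likely live in a warehouse model or in CRM assignment rules.

```python
def route_pqls(pqls, owner_by_segment, default_owner="sales-queue"):
    """Attach an owner and a plain-language reason to each PQL row."""
    rows = []
    for pql in pqls:
        reason = (
            f'{pql["action_count"]} {pql["action"]} '
            f'(qualifying threshold: {pql["threshold"]})'
        )
        rows.append({
            "account": pql["account"],
            # Fall back to a shared queue when no rep covers the segment.
            "owner": owner_by_segment.get(pql["segment"], default_owner),
            "reason": reason,
        })
    return rows

# Hypothetical PQL rows, shaped the way a scoring model might emit them.
pqls = [
    {"account": "Large Corp", "segment": "enterprise",
     "action": "stops routed", "action_count": 120, "threshold": 100},
    {"account": "Two-Person Studio", "segment": "small_team",
     "action": "stops routed", "action_count": 30, "threshold": 25},
]
owners = {"enterprise": "enterprise-rep"}

for row in route_pqls(pqls, owners):
    print(row)
```

Carrying the reason alongside the owner is what gives the rep the context this step calls for.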

4. Tailor sales methods for each type of PQL

Now for the really fun part: Reach out to your PQLs and engage with users who already value your product in a meaningful way. Build an engagement strategy for your PQLs based on each of your buyer personas.

Consider the problem your PQL is trying to solve (the thing they’re using your software to tackle) and write messaging that speaks to that solution. Show them how or why your paid version will deliver an even better experience. Since you know how they are using your product right now, it is easier to craft personalized messages and convert them to customers.

Get Data Where and How You Need It With Census

To leverage PQLs, you’ll need to be able to access your user data. At Census, we help companies of all sizes sync data from their warehouse to their business tools, so they get their valuable user insights where and when they need them.

Book a free demo, and we’ll show you how to use your existing product data to build a PQL scoring model and find the gold in your existing freemium user base.

👉 Want to see some real-world PQL use cases from the best PLG companies? Check out part two of our Product Data to Revenue series!