Did you know stakeholders’ most common complaint about data teams is “they’re just too slow”? I didn't until I surveyed 15 GTM stakeholders at HubSpot’s INBOUND conference earlier this fall.

I hunted down every go-to-market stakeholder working with a data team, so I could better understand how they feel about their data teams. In these face-to-face conversations, I asked them the following:

- On a scale of 1-10, how satisfied are you with your data team?

- What’s the most common complaint to hear about your data team?

- What’s something your data team recently accomplished that brings value to you?

- How often do you communicate directly with your data team?

I know, I know, 15 may be a small sample size, but the acceptance criteria for the surveyees were stringent. 👇

- Respondent’s company must have a data team or have 3+ data professionals in a different part of the org (finance, product, etc.)

- Surveyee must work directly with the data team as a stakeholder

- Respondent’s data team(s) must use a data warehouse/data lake/central repository of data

- Respondent’s company must have more than 75 people

I set these criteria because I only wanted to learn from stakeholders and organizations that have taken preliminary steps to become data-driven (investing in data headcount, data tools, time, etc.). Without them, I would have received countless complaints that were the result of poor decisions by organizations, not by data teams.

Below, I’ll take a look at the key takeaways from each question, as well as some ways we, as data professionals, can solve our stakeholders’ pain points, so they’ll tell the next random data person surveying them at a conference how awesome we are.

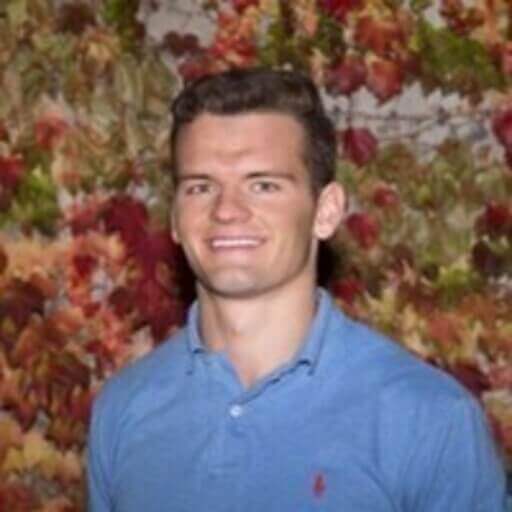

On average, stakeholders rated their data team a 7/10

When asked to rate their data team on a scale of 1 to 10, folks gave their data team an average rating of 7 out of 10. Not so bad, right? The data is slightly skewed, too, giving a median rating of 8.
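When the mean sits below the median, a few low scores are pulling the average down. Here's a minimal Python sketch of that effect. The ratings in it are made up purely for illustration (they are not my raw survey responses), and `summarize` is just a hypothetical helper name.

```python
from statistics import mean, median

# Hypothetical ratings for illustration only; NOT the actual survey responses.
example_ratings = [2, 3, 5, 6, 7, 7, 8, 8, 8, 8, 8, 8, 9, 9, 9]


def summarize(ratings):
    """Return the mean and median of a list of 1-10 ratings."""
    return {"mean": mean(ratings), "median": median(ratings)}


summary = summarize(example_ratings)
# A couple of low scores drag the mean below the median.
print(summary)
```

If you run your own survey, reporting both numbers helps show whether a handful of unhappy stakeholders is hiding behind a decent-looking average.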

Based on my experience working in data, and through conversations with others, this rating is slightly higher than I expected! Also, I noticed that whenever I asked stakeholders this question, they’d glance over their shoulders to see if any of their data colleagues were listening. Perhaps they gave a higher rating to avoid confrontation. 😂 Here are the results:

Unless your organization is highly transparent and regularly conducts performance reviews, it’s hard to gauge how stakeholders feel about your team (without relying on random conference surveys like this one). Therefore, it’s important to periodically seek feedback from stakeholders on the team's performance.

Doing so will help foster a stronger relationship and fix issues before they grow too large. This feedback can come in many shapes and sizes: a Zoom call, a survey like this one, an in-person chat, you name it.

If you’re interested in getting quality feedback on stakeholder satisfaction, check out Caitlin Moorman’s blog or use the same survey I did.

“Getting regular feedback from your organization can help the data team to prioritize the work that will have the biggest impact on your stakeholders…A recurring survey about the work of the data team can provide insight into quiet successes, pain points, or areas of confusion, and over time enables you to measure improvements against a baseline trend.”

When you and your team actively and regularly seek out feedback, you can better understand how well you’re answering your stakeholders’ questions and anticipating their data needs.

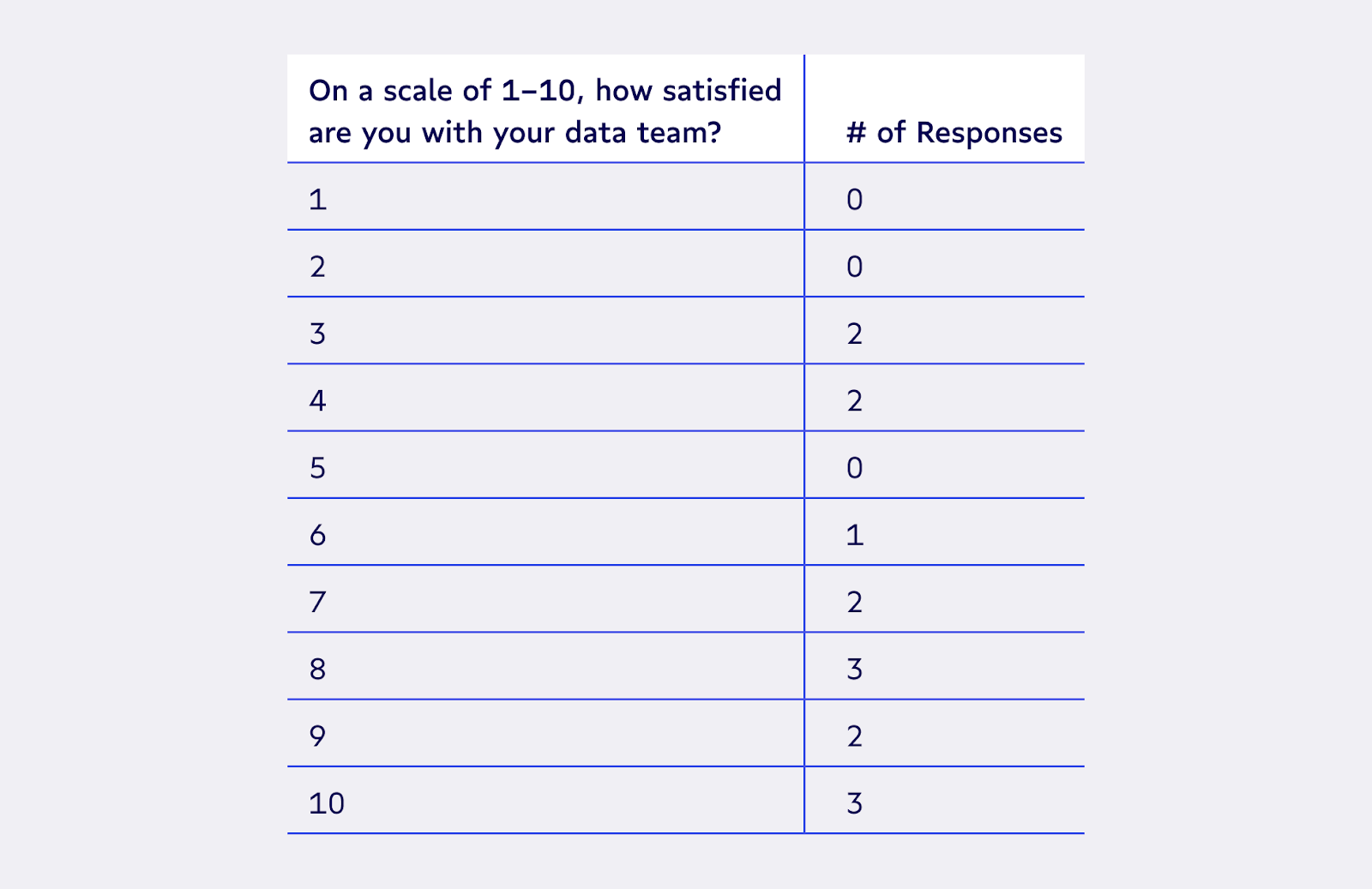

Stakeholders consistently said their data team is “too slow”

This response shouldn’t surprise you. If you’ve been in this field for any amount of time, you’ve likely heard this complaint, along with the others below.

This feedback can feel frustrating because there’s so much to unpack behind it. Stakeholders often assume the data team is “too slow” because they don’t understand your workload or process.

They often don’t see the higher-impact or time-sensitive requests that bump their “simple asks” down your to-do list. 📝 These same stakeholders don’t have insight into how dramatically different the time investment can be from project to project.

You know the feeling: One week, they might ask you for an analysis that’s a layup for you. ⛹️ The data needed for the analysis is already transformed and accessible, and you deliver it in a couple of hours. The following week they might ask for an entirely new analysis and expect the same turnaround time. Little do they know the data needed for this analysis has never been requested, you’ve never had access to it, and you end up working multiple weeks to deliver it.

At a previous company, my data team addressed complaints by holding weekly meetings for all stakeholders. In these meetings, we’d share our high-priority deliverables, explain their timelines, and address any stakeholder questions and complaints. These meetings helped strengthen the relationship between the two parties. 🤝

John Jimenez (Growth Analyst @ Storyblocks & member of The OA Club) uses a fantastic framework to gauge stakeholder satisfaction. He calls it the 30/60/90 feedback framework. Whenever he works on a stakeholder’s deliverable, he checks in at the following milestones:

- 30% - Am I on track?

- 60% - What else should we consider?

- 90% - Align with them on anything you may be missing

I imagine that any data team applying this framework would see an improvement in their stakeholders' 1-10 rating. These milestone check-ins communicate that you are making significant progress on the project and are consciously trying to solve the business problem.

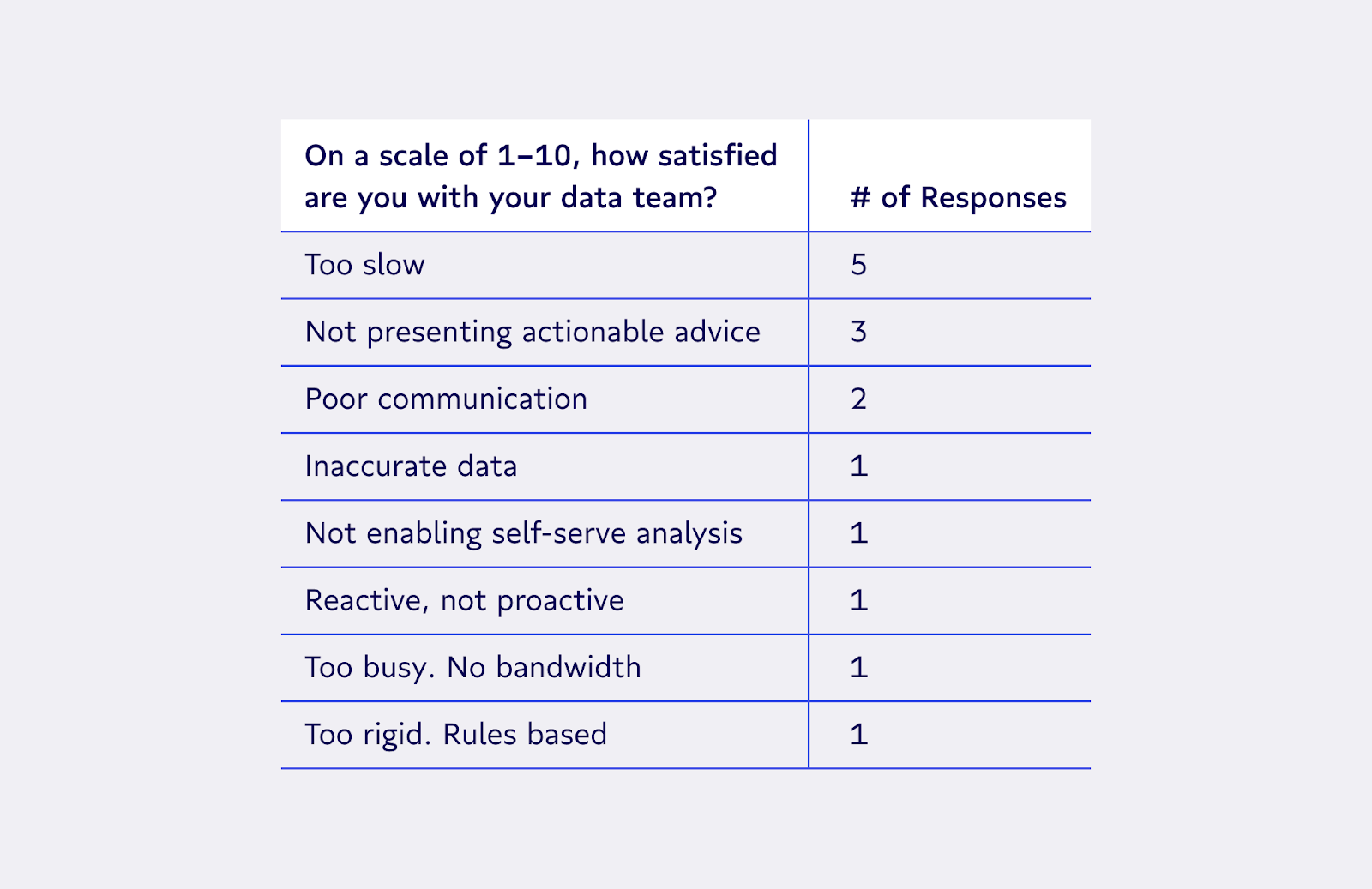

Well, I guess dashboards are still king…

Even though the modern data stack has enabled us to do so much more with data than dashboards alone (and the data community likes to dunk on dashboards 👀), stakeholders still love them — and that’s ok (really, I promise)! Check out the responses to the third survey question:

While the MDS crowd tends to bash endless dashboards, they serve an important purpose and can act as a valuable asset to help your stakeholders understand their performance, conduct simple analyses, and identify efforts to achieve their goals.

However, there’s so much value data teams can provide that stakeholders may not know about. Josh Richman, Sr. Manager, Business Analytics at Flash and member of The OA Club, shares that if you master the basics for your stakeholders (dashboards, self-serve BI, and straightforward analysis), you can be proactive and identify opportunities to make data a true partner in the business rather than a mere resource. I also like how Robert Yi describes proactive data teams in this article.

Dashboards are still valuable, and they are a key deliverable for data teams, but as Josh Richman described, once you’ve mastered the basics, you can move on to other high-value work.

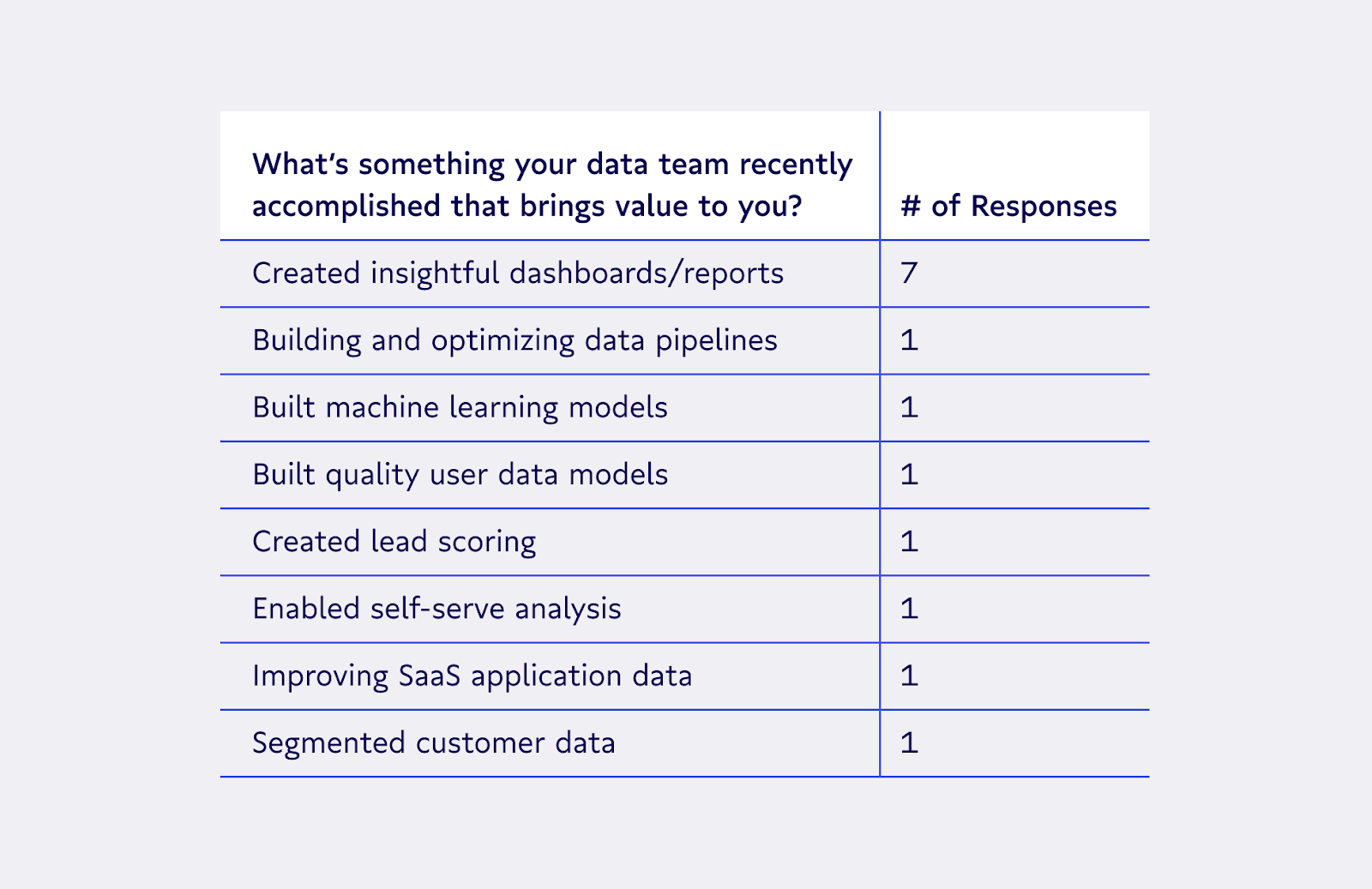

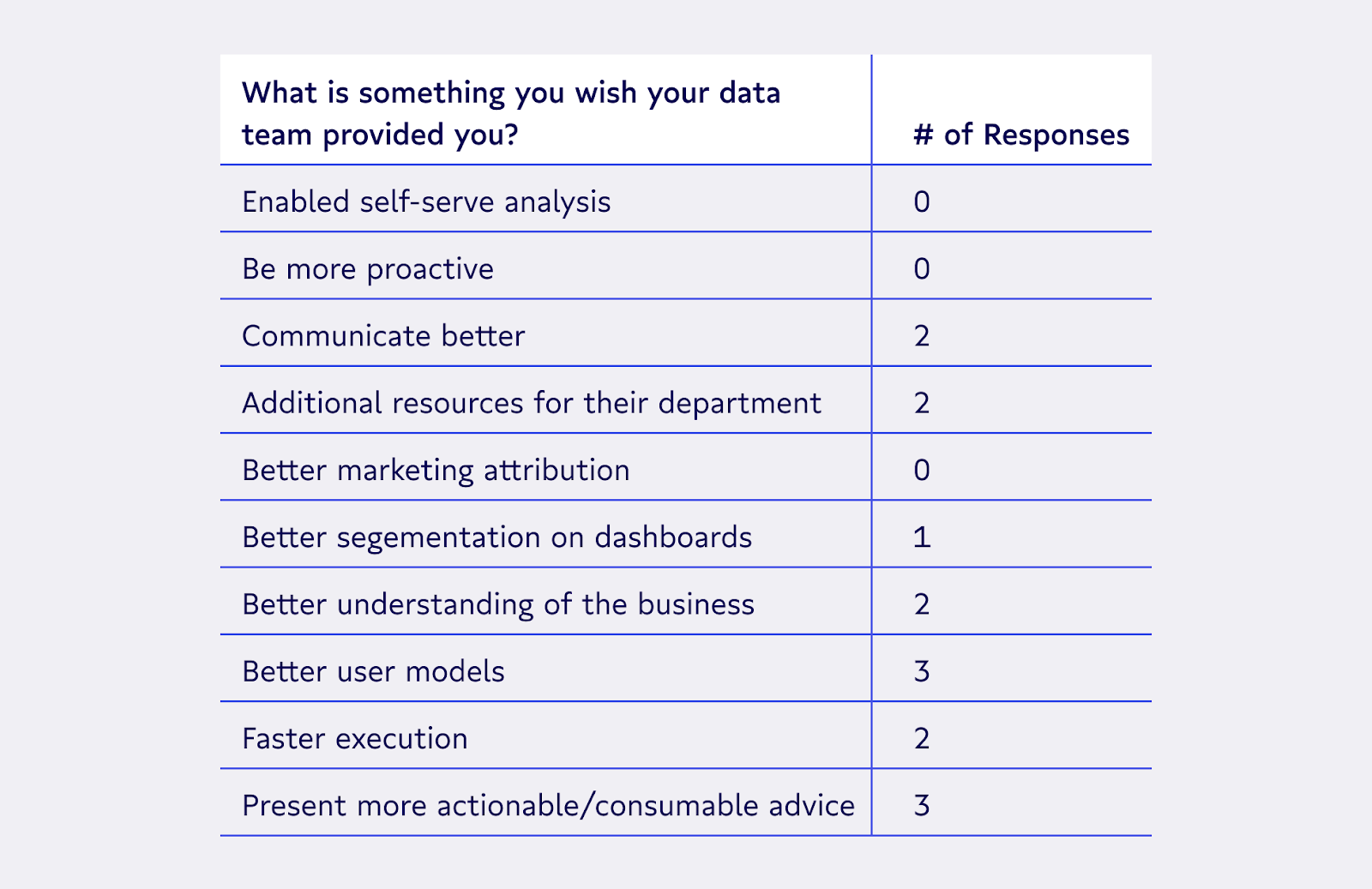

😲 Stakeholders actually want self-serve data?!

This response to “what is something you wish your data team provided you?” surprised me. Before I conducted this survey, I thought stakeholders would rank “being on time” or “better communication” as their top wish list item by a wide margin. Nope, instead, folks want better access and the ability to analyze data on their own.

It seems obvious, but every data person reading this article should explicitly ask their own stakeholders this question. It might change your efforts from “give a stakeholder data when they’re hungry” to “teach a stakeholder how to access and analyze data and feed them for a lifetime.”

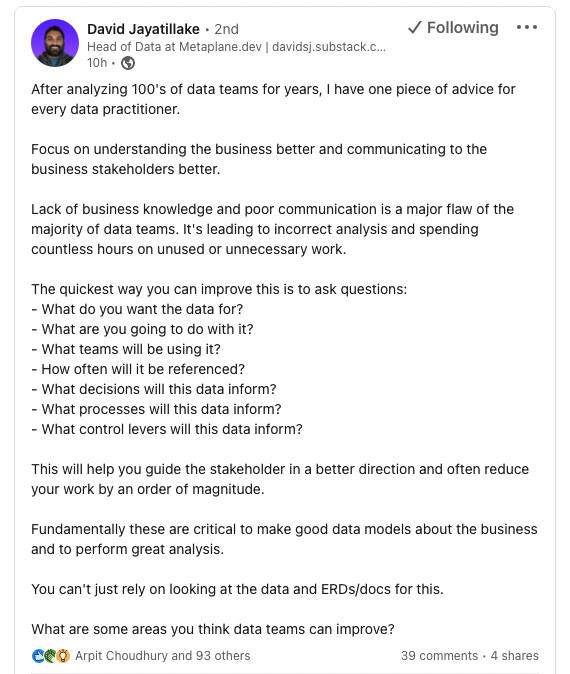

“Communicate better” came in with the second-highest number of responses — and rightfully so! I think we can all agree you can never be too good at communicating. It will improve your relationship with stakeholders and the business impact of your projects. David Jayatillake (head of data at Metaplane and member of The OA Club) touched on this recently in a LinkedIn post:

David’s #1 piece of advice to data practitioners: Understand the business and communicate better. It will help you reduce time investment per project, and it will help you guide stakeholders in a better direction. This has definitely been true for me! Anytime I lack understanding of the business, or if there are issues with stakeholder communication, the work I produce is subpar.

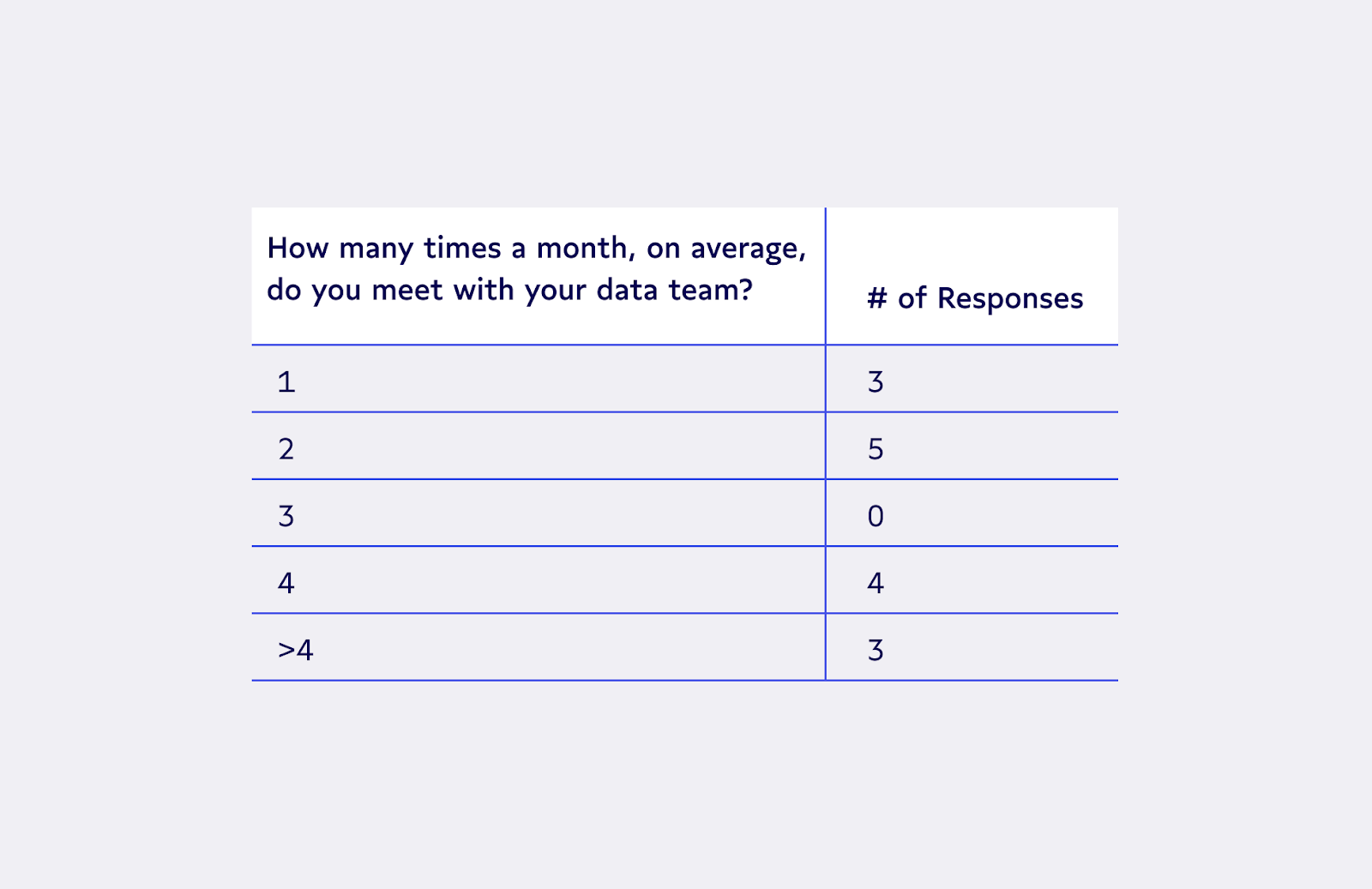

Stakeholders don’t meet weekly with their data teams?!

I was surprised to learn that the majority of respondents only meet one or two times a month! Going into this survey, I thought that all stakeholders met weekly with their data teams (or designated data team members).

I should’ve asked the respondents who met with their data teams two or fewer times a month why they meet so rarely (I’ll do it next time!). My instincts tell me that they view their data team as a service department, not a product-like department. They meet with them on an ad hoc basis rather than on a recurring, weekly basis.

When a data team meets with stakeholders on a consistent, recurring basis, it’s a sign that there’s an actual partnership. Both parties are working hand-in-hand on short-term projects, as well as thinking long-term about how to consistently use data to drive business outcomes. 🚀 I like how Singularis IT’s blog describes a partnership between data teams and stakeholders:

“Partners tend to form a more dynamic, long-term relationship with a business. It’s a partner who will actively work to grow and advance alongside you. A partner forms a relationship based on an understanding of your unique services, goals, and clients, and is ready to evolve alongside you. They ask questions and reevaluate their approach as needed.”

Data teams: Steer away from being a one-off service organization and become a partner with your stakeholders so you can grow and advance the business together.

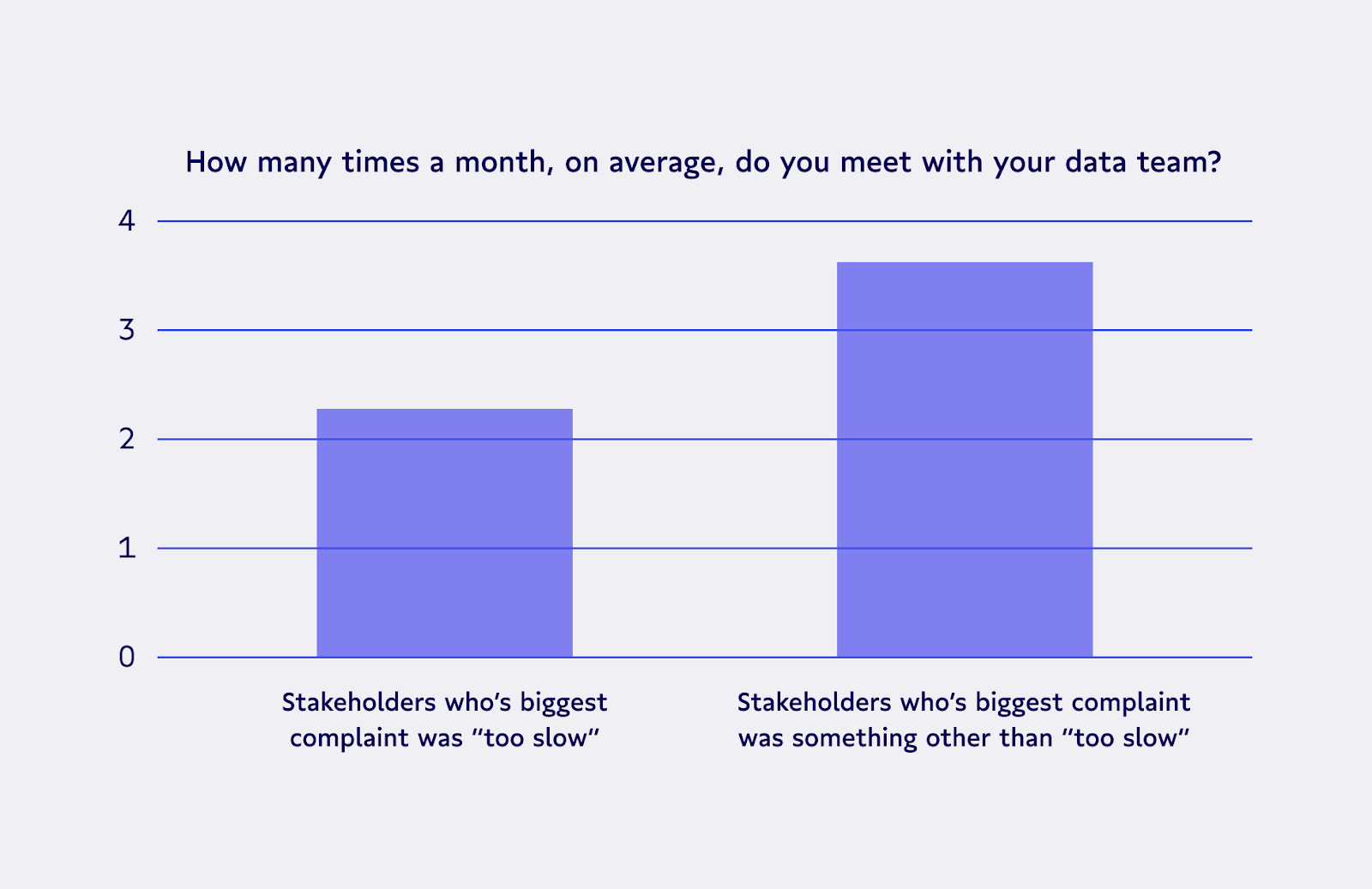

Putting responses in context: Meeting less with your stakeholders = them thinking the data team is slow

While analyzing each survey response, I uncovered something interesting: Respondents who said their biggest complaint about their data team was that it’s “too slow” met with their data team less often than other surveyees.

What can we unpack here? I think that while it’s surprising, the reason is simple: The less you communicate with your stakeholders, the less they understand how busy you are, and the more likely they are to assume you’re working slowly versus working overtime.
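If you want to run the same check on your own survey results, one simple approach is to group respondents by their top complaint and compare average meeting frequency. Here's a rough sketch. The rows, the field names (`top_complaint`, `meetings_per_month`), and every complaint label other than “too slow” are hypothetical placeholders, not my actual survey data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical rows for illustration only; NOT the actual survey responses.
example_responses = [
    {"top_complaint": "too slow", "meetings_per_month": 1},
    {"top_complaint": "too slow", "meetings_per_month": 2},
    {"top_complaint": "too slow", "meetings_per_month": 3},
    {"top_complaint": "poor communication", "meetings_per_month": 4},
    {"top_complaint": "poor communication", "meetings_per_month": 4},
    {"top_complaint": "data quality", "meetings_per_month": 4},
    {"top_complaint": "data quality", "meetings_per_month": 2},
]


def avg_meetings_by_complaint(rows):
    """Average meetings per month for each top complaint."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["top_complaint"]].append(row["meetings_per_month"])
    return {complaint: mean(values) for complaint, values in grouped.items()}


averages = avg_meetings_by_complaint(example_responses)
for complaint, avg in sorted(averages.items(), key=lambda item: item[1]):
    print(f"{complaint}: {avg} meetings/month")
```

With a sample as small as mine, treat a gap between groups as a prompt for a conversation with your stakeholders rather than proof of cause and effect.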

It’s similar to a homeowner saying their contractor is “too slow” when, in fact, the homeowner just doesn’t see all the details that a contractor is working on at once. 🏠

We can solve this issue with our stakeholders through high-quality, frequent communication. I love how Irina Kukuyeva details high-quality stakeholder communication in her blog The “dark arts” of stakeholder management. She outlines how to get on the same page, how to set deadlines, how to execute check-ins, how to co-communicate unexpected roadblocks, and more.

“The biggest pain point when it comes to developing data products is -- not data! -- but cross-team alignment, whether that’s around communication styles, priorities, deadlines, deliverables, something else, or all of the above.”

There are many ways you can go about frequent and effective communication, but one that’s worked for me is the weekly stakeholder meeting I mentioned earlier.

Where to go from here: Take these findings to the real world

Surveying 15 stakeholders took me about a full day’s work, but I gained so much insight into what I thought stakeholders wanted versus what they actually wanted. If you work on a data team, I highly recommend asking your stakeholders similar questions. You’ll likely discover many things your team can change, which could dramatically improve the relationship with your stakeholders and your impact on the organization as a whole.

In summary, here’s what I learned from the experience:

- Assume less about your stakeholders. I thought I had all the answers to these questions before I asked them; I was wrong! For example, I didn’t know BI was still such a value add for stakeholders and that they actually wanted self-serve capabilities!

- Meet often with your stakeholders. Successful stakeholder management isn't about playing some 4D mental chess. It's about talking to the people using your data and understanding their responsibilities.

If you'd like to repeat this survey with your team, check out my original questions here (and make your own local copy). 👉 Let me know how it went on the OA Club, LinkedIn, or Twitter!