At Census, one of our product values is Just works™. This month (besides decorating our office for Halloween), we focused on living up to that value by improving smaller pieces of the end-to-end sync experience: automatically adding new fields to syncs, improving our sync configuration UX, and adding more customization to scheduling and alerting.

🚀 Read on to learn about what we added to the product in October.

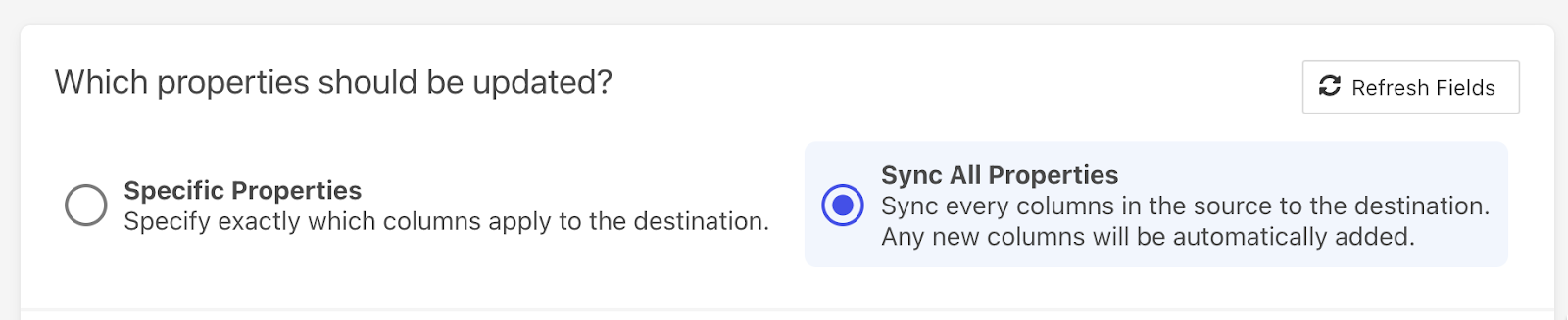

Set and forget: Automatically add new fields to syncs if they appear in the model

This one’s been a long time coming. You can now select “Sync all properties” when setting up your sync, and whenever a new field is added to your model or table, Census will automatically create a matching field in the destination. You can even choose the naming case for the field names Census creates (e.g. UPPERCASE, CamelCase, etc.).

Note: This is only supported for destinations that allow creating new fields via Census. See all supported destinations in the docs.
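To make the naming-case option concrete, here is a minimal sketch of how a source column name could be converted before the destination field is created. The function names, the style labels, and the exact output (for example, whether UPPERCASE keeps underscores) are assumptions for illustration, not a description of how Census implements it.

```python
import re


def split_words(name: str) -> list[str]:
    """Split a column name like 'first_name' or 'lastLoginAt' into lowercase words."""
    # Mark lower-to-upper boundaries, then split on anything non-alphanumeric.
    spaced = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", name)
    return [word.lower() for word in re.split(r"[^A-Za-z0-9]+", spaced) if word]


def to_case(name: str, style: str) -> str:
    """Convert a column name to the chosen naming case (hypothetical style labels)."""
    words = split_words(name)
    if style == "UPPERCASE":
        return "_".join(words).upper()
    if style == "snake_case":
        return "_".join(words)
    if style == "CamelCase":
        return "".join(word.capitalize() for word in words)
    raise ValueError(f"unknown naming style: {style}")


if __name__ == "__main__":
    for column in ["first_name", "lastLoginAt"]:
        print(column, to_case(column, "UPPERCASE"), to_case(column, "CamelCase"))
```

The useful property here is that every style goes through the same word-splitting step, so a column is named consistently no matter how it was cased in the warehouse.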

More powerful sync field configuration

If you’ve created or edited syncs lately, you’ve probably noticed some changes in the user experience. We're excited to announce that you can now customize sync behavior down to the field.

For update and create syncs, you can set the behavior for each field individually. For example, you can choose to set a field's value only if the existing field is empty, rather than always overwriting the existing value.
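As a rough illustration of the difference between the two behaviors, the sketch below merges an incoming row into an existing destination record using a per-field rule. The rule names (`always_overwrite`, `set_if_empty`) and the definition of "empty" are hypothetical and chosen only for this example.

```python
from typing import Any, Mapping

# Hypothetical rule names, used only for this illustration.
ALWAYS_OVERWRITE = "always_overwrite"
SET_IF_EMPTY = "set_if_empty"


def is_empty(value: Any) -> bool:
    """Treat None and the empty string as empty (an assumption)."""
    return value is None or value == ""


def apply_field_rules(
    existing: Mapping[str, Any],
    incoming: Mapping[str, Any],
    rules: Mapping[str, str],
) -> dict[str, Any]:
    """Return the destination record after applying per-field sync rules."""
    result = dict(existing)
    for field, new_value in incoming.items():
        rule = rules.get(field, ALWAYS_OVERWRITE)
        # Keep the existing value when the rule says to fill blanks only.
        if rule == SET_IF_EMPTY and not is_empty(result.get(field)):
            continue
        result[field] = new_value
    return result


if __name__ == "__main__":
    existing = {"lead_source": "Webinar", "plan": "free"}
    incoming = {"lead_source": "Import", "plan": "pro"}
    rules = {"lead_source": SET_IF_EMPTY}
    print(apply_field_rules(existing, incoming, rules))
```

In this example `lead_source` keeps its existing value because it is already populated, while `plan` is overwritten because it falls back to the default rule.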

We've also flipped the mapper UI so database columns are on the left and destination fields are on the right to make it easier to understand the data flow.

👉 We want your feedback! Ping us via chat or Slack, or shoot us an email with your thoughts.

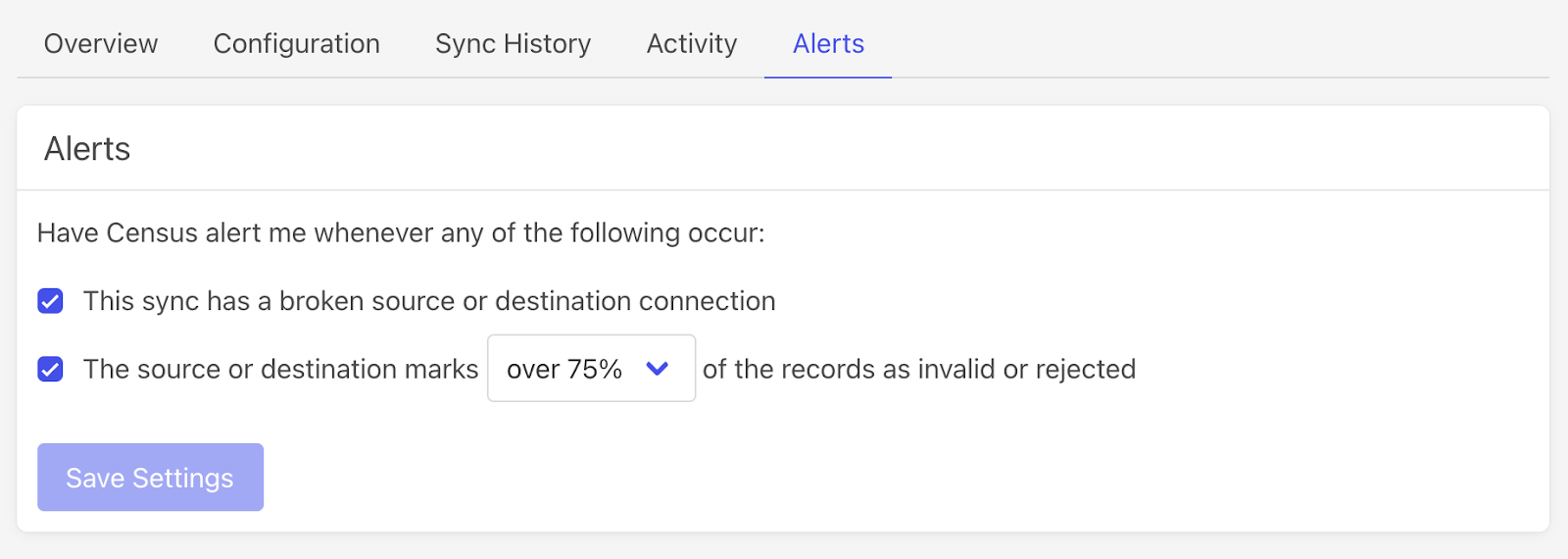

Avoid alert fatigue: Set thresholds for invalid record alerting

In addition to choosing to receive alerts for invalid or rejected records, you can now specify the threshold at which you want to be alerted, so you don't miss anything important.

This alert is now on by default for all syncs, and it fires when over 75% of the records from the source are invalid or rejected by the destination.

You can lower this threshold, or even set it to alert on any invalid or rejected record, for those syncs you want to keep an extra eye on.
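The threshold logic can be sketched in a few lines. This is only an illustration: the function name is made up, the strict "over 75%" comparison is read from the wording above, and modeling "any" as a threshold of 0 is an assumption.

```python
def should_alert(total_records: int, failed_records: int, threshold_pct: float = 75.0) -> bool:
    """Return True when the share of invalid or rejected records exceeds the threshold.

    A threshold of 0 means "alert on any failed record" (an assumption for this sketch).
    """
    if total_records <= 0:
        return False  # nothing was synced, so there is nothing to alert on
    failed_pct = 100.0 * failed_records / total_records
    return failed_pct > threshold_pct


if __name__ == "__main__":
    print(should_alert(1000, 800))                   # default threshold
    print(should_alert(1000, 3, threshold_pct=0.0))  # alert on any failure
```

With the default, a sync with a handful of rejected rows stays quiet, while a stricter threshold on a critical sync surfaces even a single bad record.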

👉 Read the docs to learn more about alerts in Census.

Create syncs programmatically via POST /syncs API

We’ve extended the Census API to support creating syncs programmatically.

👉 Read the API docs to learn more.

Customize your schedule down to the exact hours and days with Cron

Want to schedule syncs to run hourly on weekdays only? Or how about once every four hours?

You can now specify your sync schedule with incredible granularity using a Cron expression.
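For the two schedules mentioned above, the expressions in standard five-field cron syntax (minute, hour, day of month, month, day of week) would be `0 * * * 1-5` and `0 */4 * * *`. The small matcher below is a sketch for checking when such an expression fires. It assumes standard five-field cron, supports only `*`, numbers, ranges, lists, and `/step`, and simply requires every field to match, which is a simplification of full cron semantics.

```python
from datetime import datetime

HOURLY_ON_WEEKDAYS = "0 * * * 1-5"  # minute 0 of every hour, Monday through Friday
EVERY_FOUR_HOURS = "0 */4 * * *"    # minute 0 of hours 0, 4, 8, 12, 16, 20


def field_matches(expr: str, value: int, minimum: int) -> bool:
    """Check one cron field against a value. Supports *, n, a-b, lists, and /step."""
    for part in expr.split(","):
        rng, _, step = part.partition("/")
        step_n = int(step) if step else 1
        if rng == "*":
            start, end = minimum, value  # the wildcard covers every valid value
        elif "-" in rng:
            low, high = rng.split("-")
            start, end = int(low), int(high)
        else:
            start = end = int(rng)
        if start <= value <= end and (value - start) % step_n == 0:
            return True
    return False


def cron_matches(expression: str, when: datetime) -> bool:
    """Return True if a five-field cron expression fires at the given minute."""
    minute, hour, day_of_month, month, day_of_week = expression.split()
    return (
        field_matches(minute, when.minute, 0)
        and field_matches(hour, when.hour, 0)
        and field_matches(day_of_month, when.day, 1)
        and field_matches(month, when.month, 1)
        # cron counts Sunday as 0; isoweekday() counts Monday as 1 and Sunday as 7
        and field_matches(day_of_week, when.isoweekday() % 7, 0)
    )


if __name__ == "__main__":
    monday_9am = datetime(2024, 1, 1, 9, 0)
    print(cron_matches(HOURLY_ON_WEEKDAYS, monday_9am))
    print(cron_matches(EVERY_FOUR_HOURS, monday_9am))
```

Checking an expression against a few sample timestamps like this is a quick way to confirm a schedule does what you expect before saving it.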

👉 Read the docs to learn more.

Cancel running syncs

You can now hit pause during an actively running sync and choose to cancel the job. This will also pause the schedule so the sync won’t attempt to run again until you hit “Resume”.

S3 (New destination!)

Schedule secure data exports from your warehouse to CSV files in your S3 buckets. Read the docs to learn more.

SFTP (New destination!)

Send data from your warehouse to your SFTP server. Read the docs to learn more.

Pipedrive (Updated!)

Added support for deal and notes objects. Read the docs to learn more.

Braze (Updated!)

Added option to null mapped fields instead of deleting full records when using Mirror sync for the Braze user object. This makes it easier to manage audience attributes in Braze without worrying about API rate limits. Read the docs to learn more.

Mixpanel & Amplitude (Updated!)

Added support for Properties Bundle passthrough. Rather than mapping individual fields, you can now pass a JSON object of properties directly through to Mixpanel or Amplitude (coming soon for other event data destinations!). Read the Mixpanel or Amplitude docs to get started.
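To show the idea behind passthrough, here is a small sketch that builds an event either from individually mapped columns or from a single JSON column passed through as-is. The column names, the function, and the shape of the resulting event are hypothetical and are not the actual Mixpanel or Amplitude payload format.

```python
import json
from typing import Any, Mapping, Optional


def build_event(
    row: Mapping[str, Any],
    field_mapping: Optional[Mapping[str, str]] = None,
    properties_column: Optional[str] = None,
) -> dict[str, Any]:
    """Build an event from a warehouse row (hypothetical shape for illustration)."""
    properties: dict[str, Any] = {}
    # Field-by-field mapping: each source column is copied to a named property.
    for column, prop in (field_mapping or {}).items():
        properties[prop] = row[column]
    # Passthrough: a whole JSON object of properties is merged in unchanged.
    if properties_column is not None:
        bundle = row[properties_column]
        if isinstance(bundle, str):
            bundle = json.loads(bundle)
        properties.update(bundle)
    return {"event": row["event_name"], "properties": properties}


if __name__ == "__main__":
    row = {
        "event_name": "Order Completed",
        "props": '{"total": 42.5, "currency": "USD", "coupon": null}',
    }
    print(build_event(row, properties_column="props"))
```

The appeal of the bundle approach is that new properties added to the JSON object flow through without anyone having to edit the sync's field mappings.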

❤️ As always, a big thank you to you, our Census Champions, for all your feedback and for inspiring us with all you do with data. If you’ve made it this far, you can claim your free Census Champion swag pack.

Which of these updates are you most excited to try? Contact us with any questions or feedback, or get started in Census today.

See you next month for more. 🚀