You know you need some extra help with your data, but you don’t know who to hire. There are data analysts, data engineers, and now... analytics engineers?! 🤯

It’s easy to think that data engineers and analytics engineers are one and the same. After all, they sound so similar, right? But analytics engineers and data engineers have varying responsibilities. So which one is right for you and your organization comes down to your needs, where they sit in the business, and what skills are needed for the job.

TL;DR: Analytics engineers vs data engineers

Essentially, analytics engineers sit closer to the business and focus on the data itself while data engineers sit closer to engineering and focus more on the processes and infrastructure to properly deliver the data.

To put this into perspective, let’s say you are on the innovation team and are in charge of launching a new product. In this case, you’d work with a data engineer to ensure the right information is being displayed on the product page of the company’s website. You may also work with them to ensure traffic to this page is being tracked properly.

Once all that 👆 is solidified, you’d work with the analytics engineer to make sure the data points and metrics you need to track the product’s success are being ingested into the data warehouse. And if the data isn’t organized in a way that makes it usable to you, you would also work with them to craft a dataset that gives you the metrics you are looking for.

Make sense? Now, let’s further explore the business organization differences between analytics engineers and data engineers and their respective responsibilities.

Where do they sit in the business?

Analytics engineers typically sit between business and engineering teams on an analytics or data-specific team. They act as a liaison between the two teams since they are able to understand technical concepts as well as business concepts. That usually entails communicating with business stakeholders to understand their needs, then translating them into data models. For that reason, analytics engineers are also familiar with transactional database models, knowing how to ingest these into a warehouse.

The 🔑 differentiator with analytical engineers is business context. Because data models being built by analytics engineers are built with the business in mind, it’s important for them to not only understand different metrics but exactly how they’re used.

In contrast, data engineers typically sit on an engineering team. They rarely interact with business teams and instead communicate with analytics engineers. Their tasks are typically assigned by an intermediary – such as a Scrum Master or Project Manager – who decides what is most important for the business from an engineering perspective. At their core, data engineers are responsible for how data is collected into a transactional database as well as different integrations.

How do their skills vary?

While skills often vary depending on your organization and how large it is, these are the general differences in skills between the two roles.

Analytics Engineers

An analytics engineer role blurs the line between technology and business. While the responsibilities may differ greatly from company to company, at the very least, every analytics engineer should have the following proficiencies.

Data modeling

Analytics engineers have a deep understanding of data modeling or data transformation. This means they know how to piece together complex logic to create automated, reusable datasets that power your dashboards and reports. 🔌

SQL

While it's important for every data professional to know SQL, analytics engineers eat, sleep, and breathe SQL. Because it’s the main language used for data modeling and the core of many popular tools, SQL is what allows them to query the databases within your data warehouse and calculate KPIs.

Data warehousing

Analytics engineers own your data warehouse (or the location where all of your data is stored). They set up the architecture of the warehouse so that it’s properly optimized for use by analysts and your data visualization platform.

A large part of this warehousing is also understanding the proper roles and permissions that keep your data secure. 🔒 Popular data warehouses like Snowflake, Databricks, and BigQuery fulfill the same purpose, but each has its own unique features. With that said, chances are if you know one, you can easily learn how to use the others.

Modern data stack tools

A modern data stack consists of a few different pieces, but two of the main ones are ingestion and orchestration. Analytics engineers know exactly how to use these tools in order to move data around within the stack.

For ingestion, they need to have a familiarity with some of the most popular ingestion tools like Fivetran, Stitch, and Airbyte. Fivetran and Stitch are more enterprise-friendly tools with fairly simple setups while Airflow is an open-source tool that requires more technical expertise.

Analytics engineers must also have a familiarity with orchestration tools (or the tools that help deploy your data models to production). However, depending on your team, this could also be a skill the data engineers possess as well.

Interestingly, Airflow is more popular with data engineers for orchestration while other tools like Prefect and Dagster seem to be preferred by analytics engineers. But really, the preference just depends on your experience and specific coding skills. 🤷

dbt

dbt is a modern data stack tool used for data transformation. This tool combines an analytics engineer’s knowledge of data modeling and SQL, while also offering unique features to make modeling easier.

It helps an analytics engineer create modular, efficient, and easy-to-read data models while reducing repeat code with advanced functions like macros and other packages you can easily install.

Fun fact: dbt actually created the role of an analytics engineer! 😮

Analytics dashboards

While it can be argued that dashboards are the main responsibility of data analysts, analytics engineers are also skilled in creating visualizations using popular tools like Tableau, ThoughtSpot, and Looker. After all, it is their data models that end up powering them. For that reason, it’s important for analytics engineers to understand how to take these datasets and then use them to display data in the way stakeholders want and need.

Data Engineers

Like analytics engineers, the responsibilities of a data engineer will usually vary depending on the type of company that they work for and the specific industry, but they can broadly be categorized into three main categories: Generalist, pipeline-centric, and database-centric. Regardless of which category they reside in, however, every data engineer should have the following skills.

Python

As a data engineer, it’s essential that you know Python. This is the language typically used in orchestration tools like Airflow, Dagster, and Prefect, but it’s also commonly used for API development, interactive testing, and scripting. Luckily, it is one of the easiest to understand and learn, making it so popular in the data world.

DevOps

With data engineering comes delivering applications and ensuring they are properly deployed to production. Depending on the responsibilities within your team, and how big your team is, the data engineer may be in charge of pushing code changes.

If not, they at least need to be familiar with cloud computing services like AWS, Google Cloud, and Azure. Knowledge of at least one of these platforms is needed to host any services supporting different applications. In addition, knowledge of Kubernetes, an open-source container orchestration service, is common among data engineers who do a lot of DevOps work.

Bash

Bash is a command line language that makes navigating between directories and editing files easier. It’s often used in deployment scripts in DevOps, allowing for the automation of otherwise time-consuming jobs.

Git

Git is a version control language that helps track code changes so engineers can easily save and collaborate on code within their respective teams. It’s also a great tool to use as “best practice” in case incorrect code is pushed to production and deployment needs to be reverted.

Orchestration tools

As mentioned before, orchestration tools such as Airflow, Dagster, and Prefect are also important for data engineers to be familiar with. Depending on your team and the skillsets of each member, this could be the responsibility of either the data engineer or an analytics engineer. Honestly, it really just depends on your organization. However, because data engineers are more likely to have knowledge of Python, which these platforms use, it is more likely for this to fall into the hands of the data engineer.

Who should I hire? 🤔

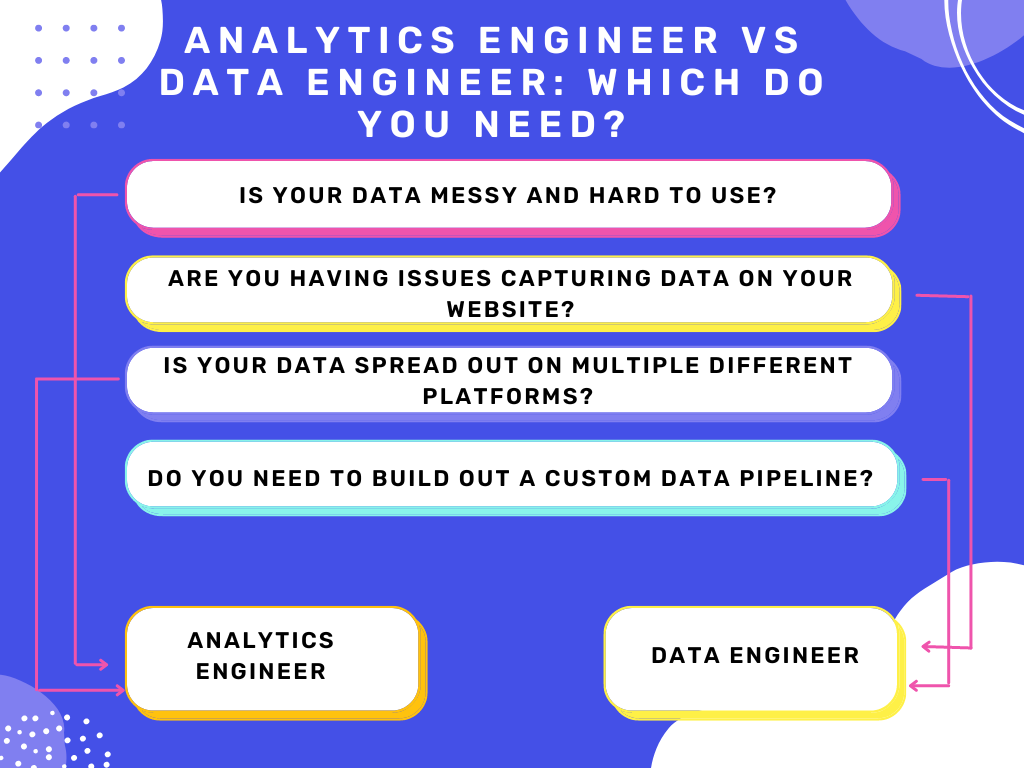

Now for the fun part. Which role is right for your team? In general, here's a helpful visual to break down the differences. 👇

While the lines between these roles can be blurry, you can consider these distinctions when you consider what you're trying to accomplish and the specific pain points you hope to resolve.

Is your data messy and hard to use? → Analytics Engineer

Suppose you’re experiencing data quality issues – anything from incorrect data, to missing data, to no data at all – that make your data hard to use. In that case, you’ll want to hire an analytics engineer.

Analytics engineers own the data pipeline from ingestion to visualization, so they’re the ones that will closely monitor data to ensure it meets the company’s standards. ✅ In other words, if there’s missing or incorrect data, they’re likely the first ones to know about it. An analytics engineer can implement testing through tools like dbt and re_data, set up alerting, and put in place preventative measures to ensure data always looks as expected.

Are you having issues capturing data on your website? → Data Engineer

Data engineers typically focus on the backend processes of the website that help to capture all of the important customer data. If you aren’t collecting this data, or are having trouble collecting it, you’ll need to hire a data engineer.

Analytics engineers typically work with the data after it’s already captured, working on moving it from point A to point B. 📍 Data engineers, on the other hand, can help code the systems and processes to ensure this data is being sent to a place where the data can then be properly used by analytics.

Is your data spread out on multiple different platforms? → Analytics Engineer

If you’re having trouble establishing a single source of truth for your data, then you’ll want to hire an analytics engineer. Analytics engineers own the data warehouse that acts as a company’s single source of truth for all things data. They ingest data into this warehouse from all different sources, clean the source data, and then write the basic data models. Among other things, they’ll help you properly document and consolidate your data sources so that your metrics and KPIs are consistent across all areas of the business. 👍

Do you need to build out a custom data pipeline? → Data Engineer

While this could be up for debate, if you’re looking to build a custom data pipeline, it would be best to hire a data engineer because they typically have more experience with tools like Airflow that require deep knowledge of Python, DAGs, and cloud infrastructure.

Building a custom data pipeline can get quite technical and complicated for someone with less data engineering experience. While some analytics engineers can definitely take this on, they are typically more comfortable with more low-maintenance data pipeline tools such as Prefect and Dagster.

Choosing the best engineer for the job 👷♂️

Understanding the pain points you’re trying to solve will help you hire the right type of data expert for your organization – and make the hiring process more efficient along the way.

Remember: Analytics engineers focus on the data itself, looking at issues like data quality, freshness, and proper arrival time. They own the data from ingestion to orchestration. Alternatively, data engineers focus on the data infrastructure of the company site and systems, and the tools supporting the data pipelines.

While this article is a great overview of the differences between these two roles, keep in mind that this varies from business to business. So, talk with your data and technologies teams to better understand the gaps that exist and who would be best to fill them. Better yet, send them this article and have them point out the scenarios and specific skills they would find useful to support the work that needs to be completed for the business. Happy hiring! 😄

✨ Want to join the Census team? Come work on Reverse ETL with us! We’re hiring across all departments.