Customer Data Platforms (CDPs) promise a quick win with your data. As an off-the-shelf data solution, CDPs offer to do the hard work for you—generate customer data from your products and platforms, model it, and deliver it to your tools of choice. They promise to provide a single source of truth right out of the box, so that the coveted Customer 360 is not only possible, but easily attainable. 👀

This all sounds wonderful, but behind the shiny promises are fundamental problems with the way CDPs operate which can set data teams and marketers back where they started, or worse.

Choosing a packaged CDP as your primary data platform can leave you with an incomplete data set, data you can’t trust, and a vendor you’re locked into. Let’s dive deeper into one example with Segment, and explore the challenges of working with a CDP up close. 🤔

Segment’s customer data platform

Segment started out as 400 lines of javascript that could send data to just eight destinations: Google Analytics, KISSmetrics, Mixpanel, Intercom, Customer.io, CrazyEgg, Olark, and Chartbeat. Several years and product iterations later, Segment transformed into a customer data platform, promising to make it easy to send data to hundreds of destinations.

Segment’s product messaging lists several benefits that appeal to marketing teams and the wider organization. The ability to build a single customer view, personalize the customer experience in real-time, and segment your audience based on behavioral data. These are all great use cases for the marketing team, but Segment and traditional CDPs often fail to live up to their promises—leaving behind a disjointed, incomplete data set you can’t put to good use.

Low visibility of your customers

The richness of the data you can collect with Segment is highly limited compared to best-in-class data generation tools. If you’re working towards building a cohesive single customer view, the more data points and context you can gather behind user behavior, the better. Ideally, you’ll have plenty of data to help you identify users or buyers on the individual level by joining together user identifiers across all your platforms and products.

But event data from Segment is limited to a small number of data points per event, compared to 100+ data points possible through a dedicated behavioral data platform (more on that later). This limits your ability to develop a comprehensive understanding of your customers—worse still, you could be missing key data to inform your marketing strategy. Limited behavioral data means your view of the customer could be incomplete, fragmented, or just plain wrong.

No control over your data, or data quality

CDPs like Segment are essentially “black boxes”, leaving you in the dark about how your data is collected and transformed. That’s a major red flag. 🚩 Without any say over how data is generated, you can’t account for its quality or usability. You also can’t decide how to track your key events, or what types of data should be collected. 🤨

This might not be a problem if you’re just sampling data. But for more complex use cases, such as personalization, lack of control is a big deal. You’re forced to work with Segment’s predetermined data structures, which may not necessarily fit with your business model or match your expectations for data quality.

Segment also siloes off your raw data, holding it within its platform and limiting your ability to unify your data across multiple platforms and products to get a single source of truth. And since you’re not in control of your data collection, you can’t easily mitigate privacy measures such as browser restrictions and ad blockers. You’re left with major blindspots since you have little to no visibility over key customers using browsers that block tracking, like Safari and Firefox. 🦊

This lack of control severely limits your ability to analyze and/or work with the data and, ultimately, could lower your confidence in your data’s integrity.

Locked in, with no ownership

Since Segment operates on a SaaS model, your data is locked into a platform you don’t own or control. If Segment suffers an outage or is compromised in any way, your data is vulnerable since it’s held in their cloud environment. ☁️

No big deal... Segment can be trusted with your customers’ data, right? But alarmingly, software companies experience on average 12 incidents of unplanned application downtime every year. At the time of writing, Segment has reported 9 disruptions to their services, just this month.

Every minute data goes missing could be costing your business thousands of dollars, depending on how you use data and the scale of your operation. Even in the best-case scenario, any data loss event is a major concern for customers who are increasingly conscious of their privacy, and how their data is stored and used.

Then there’s the vendor lock-in itself. If you want to expand your data volumes, Segment’s scaling price structure can quickly become expensive. But Segment’s platform is not designed to be flexible or make it easy for you to evolve your infrastructure. This can leave you with an eye-watering bill if your volumes increase significantly. 💸

Snowplow’s behavioral data platform

Created in 2012, Snowplow emerged as an open-source data collection tool in the early days of data warehousing. Redshift had just been launched, and many hacky, one-man-band data Macgyvers adopted Snowplow as a free, versatile solution for tracking events across web and mobile. 💪

Fast forward to today, and Snowplow is a robust, flexible data solution, trusted by companies like Strava, Autotrader, and Gousto to create, enhance, and model high-quality first-party customer behavioral data. Let’s take a look at what makes Snowplow the platform of choice for data generation compared to CDPs like Segment.

Rich, behavioral first-party customer data

Snowplow generates and delivers super rich and granular behavioral first-party customer data into your warehouse or data lake, made up of 100s of data points to give you an overview of what your customers are doing. A single event from Snowplow can give you a wealth of insight into customer behavior, who they are, where they’re based, and how they’re interacting with your product, web page, or even in-store purchases. 🕵️

Because Snowplow delivers data in a raw, unchanged, “unopinionated” format, it’s in an ideal state for combining with other data sets to build a truly unified view of the customer. You’re also able to model the data in a way that makes the most sense for you and your business, rather than relying on the prepackaged data that comes from a CDP. The end result is a rich, powerful data set that can help you deeply understand your customers and prospects, without missing data or gaps in your customer 360.

Unmatched data quality

When it comes to how you track your data, Snowplow puts you in the driver's seat. 🏎️ Snowplow lets you structure your event data with self-describing schemas— a bit like writing a blueprint for the type of events you want to track before you track them.

That means you get a highly “expected” data set by the time your data lands in the warehouse. In other words, your tidy, well-structured data will need very little (if any) cleaning, which is a joy for data teams and analysts.

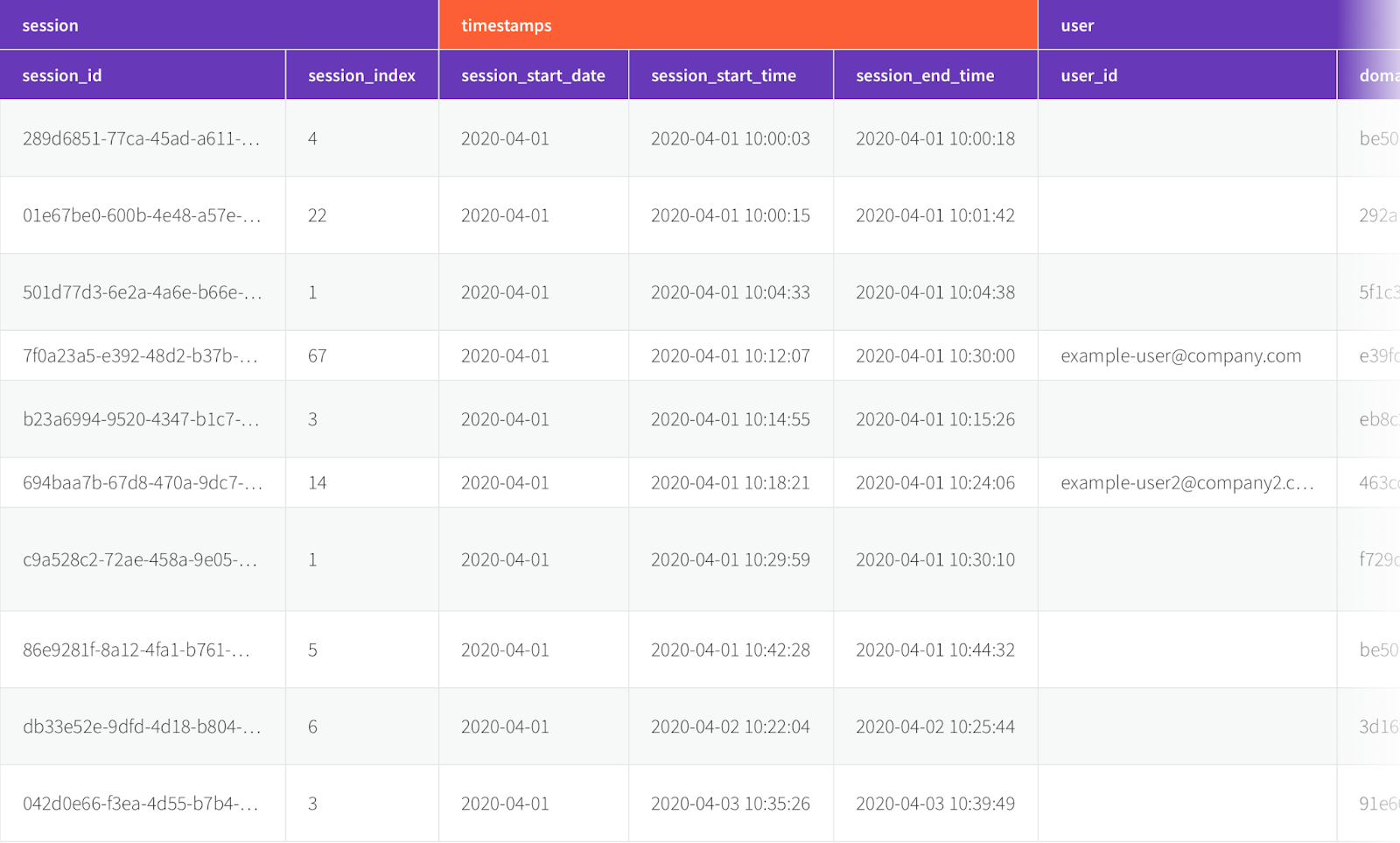

|

Unlike with Segment, the quality of your Snowplow data is something you can control and rely on. In fact, Snowplow data can be optimized to a point where you can trust it for advanced data use cases like feeding machine learning models or building predictive analytics engines.

The best part about Snowplow’s infrastructure is that it’s highly adaptable. So as you evolve your product or want to track new features, you can adapt your schemas to ensure no loss of data or compromise to quality over time.

Flexibility and total data ownership

Unlike Segment and other CDPs, it’s possible to run Snowplow natively in your cloud environment, so you can have total ownership of the end-to-end pipeline.

Essentially when you use Snowplow, you know you’re not sending data off to any third party where its security and governance are outside of your control. Everything lives under your roof, so to speak, and your data, your customers’ data, and sensitive information, never leave the safety of your own environment. 🔒 For large companies and/or those who have privacy concerns, this is massive.

Better together: Snowplow and the modern data stack

Since Snowplow is the best-in-class tool for creating first-party customer behavioral data, it’s perfectly suited to work alongside other platforms to form a complete data stack. Building a stack of purpose-built tools has a lot of advantages, including the freedom to piece together your end-to-end data pipeline, and the ability to deliver extremely high-quality data into your tools of choice. 🤖 This not only solves your tooling problem, but more importantly, Snowplow supports solving the customer data problem.

Unlike a CDP, which assumes the role of data collection, transformation, and activation, you’re free to combine Snowplow with tools like dbt to model your data on your own terms and build a centralized source of truth in your data warehouse. From there, you’re laying a path to democratize your data, opening it up to multiple teams in the organization from marketing and sales, to the product team, and so on.

In this way, rather than buying a CDP that siloes off your data and limits your control, you’re composing one yourself. This unlocks countless opportunities for your data. And when your behavioral customer data is centrally owned and governed in the warehouse, you’re in the best position possible to govern it properly, combine it with other data sets (sales data, for example), and use tools like Census to make it operational to frontline teams.

Instead of being siloed away in a black box, your data should be centrally owned and available. That’s a reality with Snowplow, while it's much more difficult — if not impossible — with CDPs like Segment.

Behavioral first-party customer data in minutes

Since the recent launch of BDP Cloud, it’s now easier than ever to deploy Snowplow technology hosted and managed by Snowplow. This means it’s possible to benefit from Snowplow’s robust infrastructure without the barriers of setting up your own cloud environment or the time it takes to host Snowplow yourself. 🔥

Technology like BDP Cloud brings the speed of access and ease of use that traditional CDPs like Segment promise, but with no loss of integrity. It makes choosing future-proof solutions like Snowplow an even easier choice for delivering data that, unlike CDPs, keeps its promises. ✅

⚡ Join the 1.9 million websites and apps already using Snowplow and start harnessing the power of first-party customer data today.

🚀 And when you want to activate all that first-party data, turn to Census. Book a demo with a product specialist to find out how.